Methodology of ELI5ML

See more of ELI5ML HERE

Motivation

LLMs (large language models) have shown to be highly effective at summarization tasks. However, it’s important to keep in mind that they can also make mistakes. Therefore, it’s best to use LLMs as tools and carefully review their output. With the advancement of conversational LLMs like ChatGPT, it’s now easier for humans to give feedback and improve LLM performance in real-time, enabling a faster iterative process.

From my perspective, the near future will observe the emergence of Large Language Models (LLMs) like ChatGPT as an important tool to enhance human intelligence and improve productivity. The key factor here is that professionals must learn to use these tools effectively and responsibly. Verifying the output of these tools is a crucial step in this process. As LLMs become more advanced, humans must also strive to be more knowledgeable in order to distinguish between correct and incorrect answers, even if they sound plausible.

For that reason, I set out to build a collection of short articles that explain machine learning concepts in simple terms using ChatGPT. The collection is aptly named “ELI5ML” - Explain Like I’m 5 for/using Machine Learning. Insights from the process will help inform future use of the technology.

Methodology

There are 3 main steps in the creation of an article:

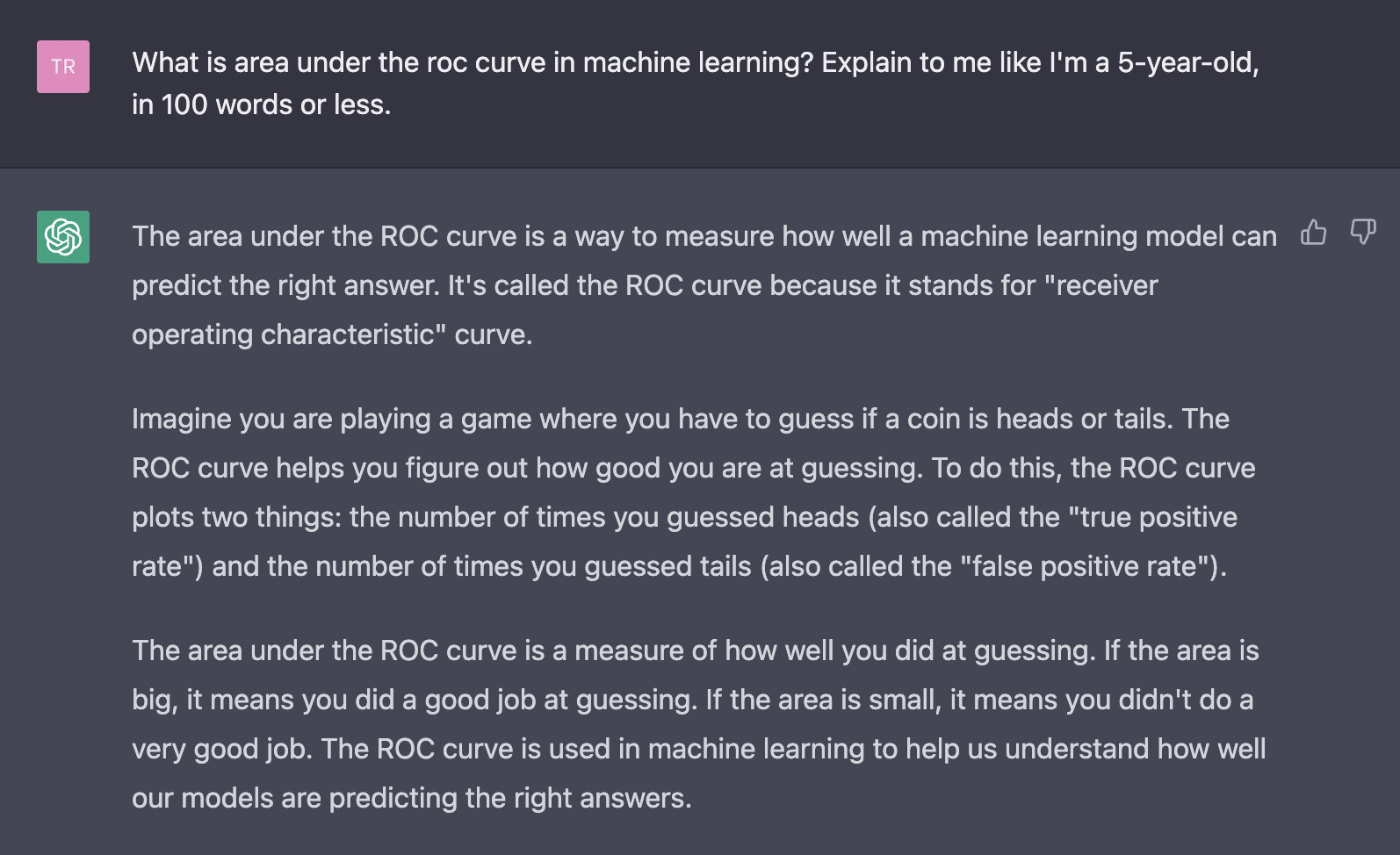

- Prompt engineering for the initial request/question (i.e. asking ChatGPT to write an article).

- Iterate the output:

- Assess ChatGPT’s output regarding its correctness, usefulness, diversity of ideas, etc.

- Provide feedbacks and instructions for ChatGPT to revise the article.

- Repeat the 2 steps above until marginal improvement plateau.

- Make final edits (e.g. correct mistakes that ChatGPT can’t get right).

Below is an example:

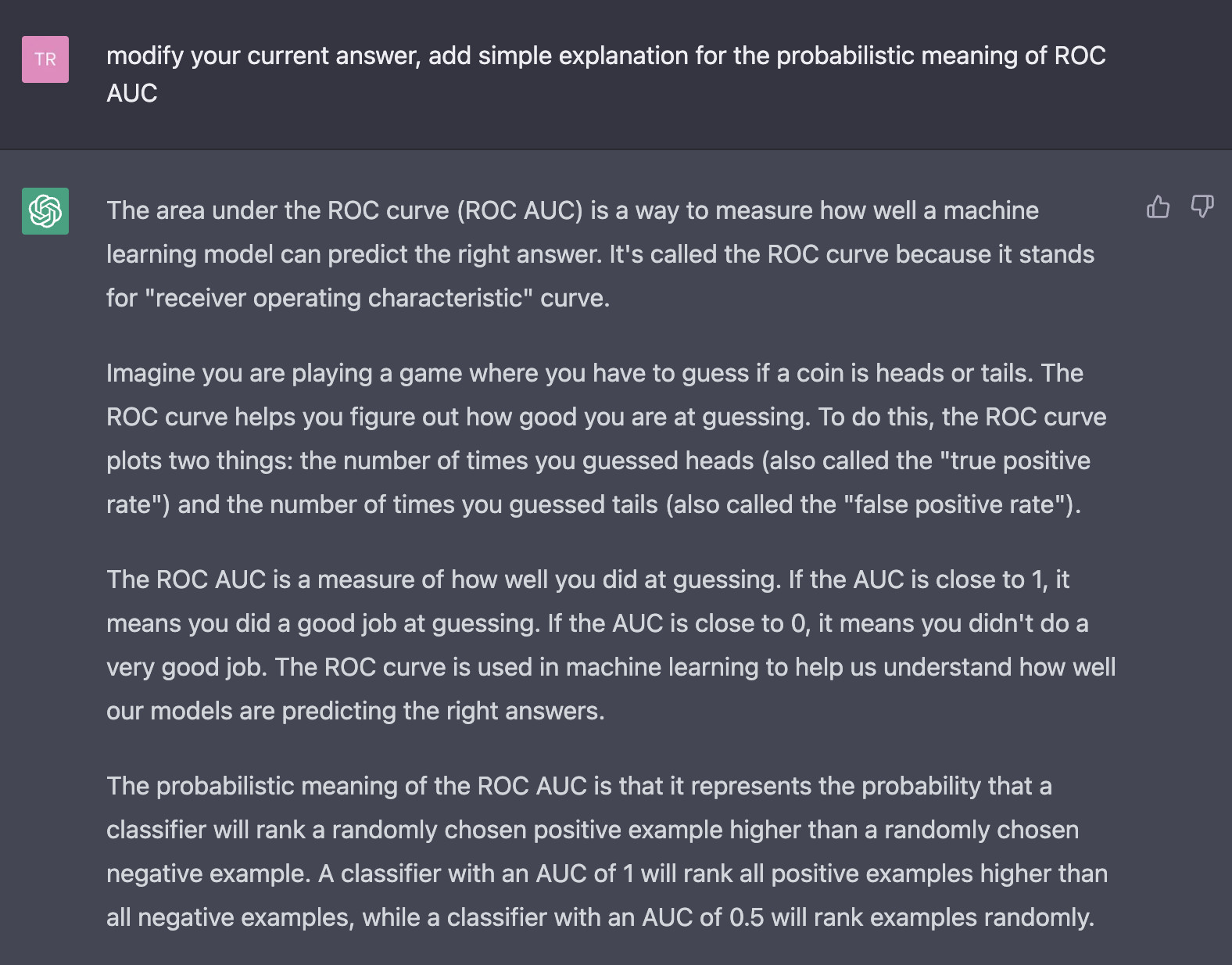

Overall it sounds good, but lack the probabilistic meaning of ROC AUC, which in my opinion is a crucial insight.

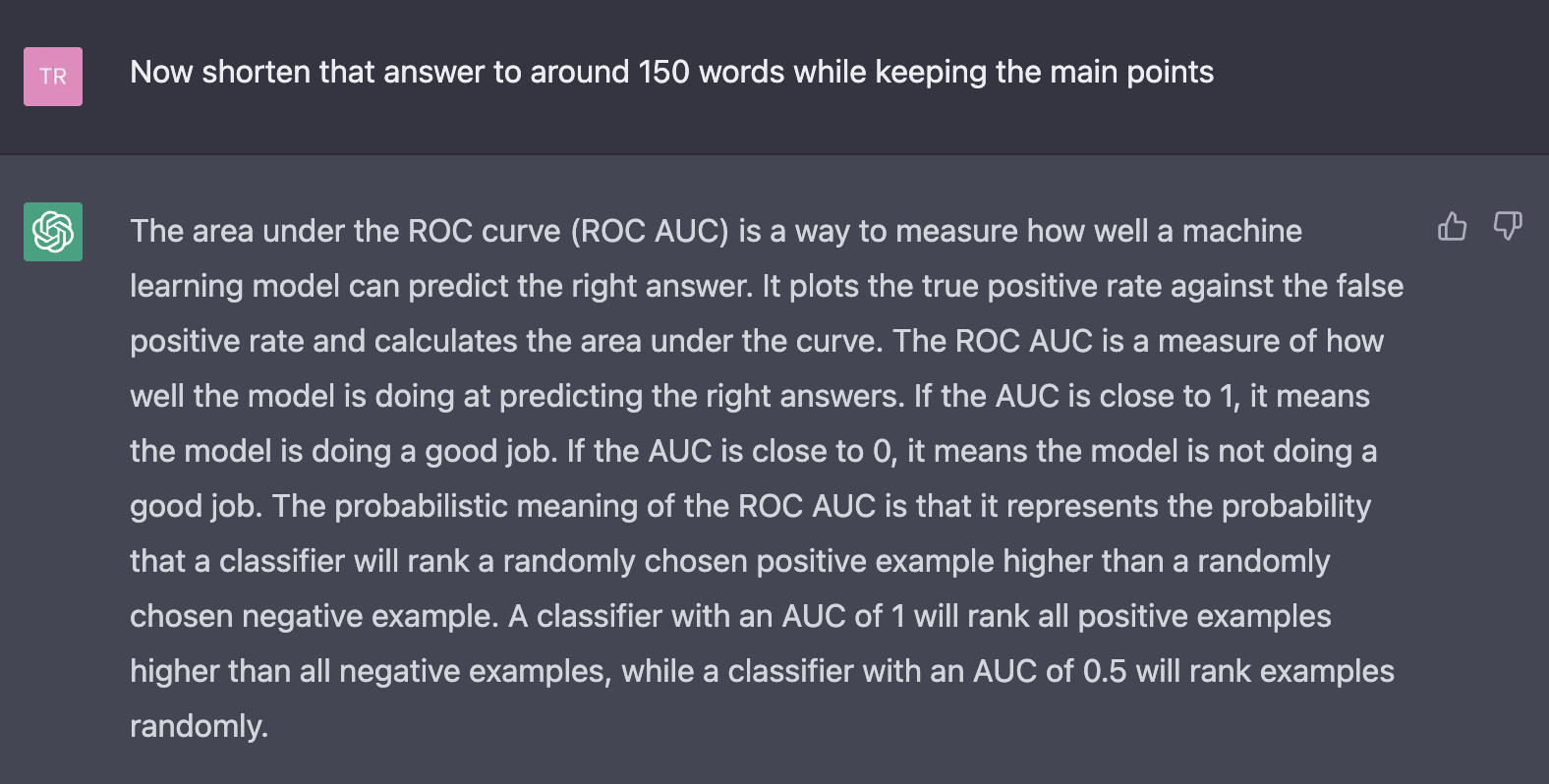

It is too lengthy now for the purpose of the article.

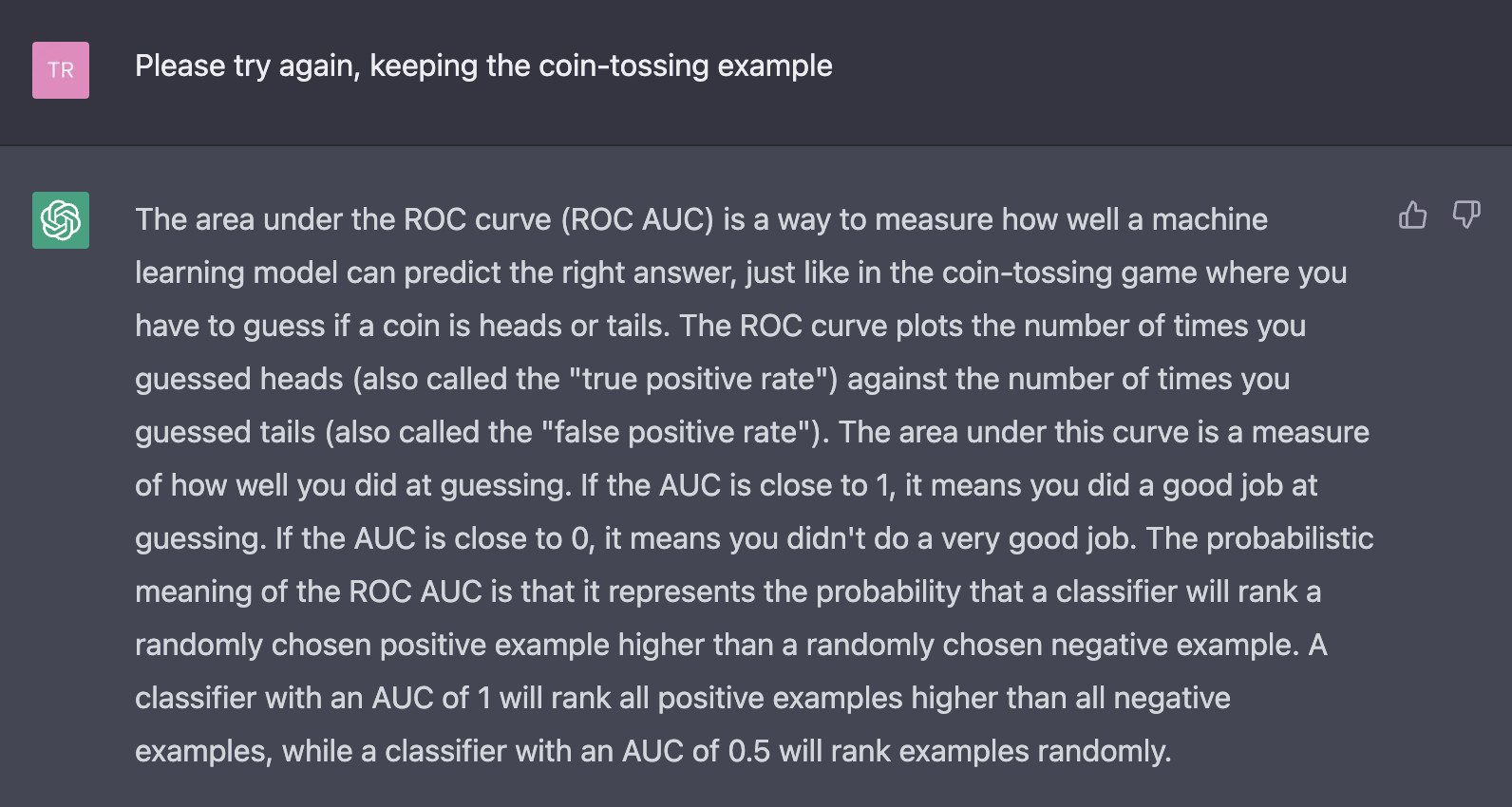

It removed the coin-tossing example, which is an excellent way of explaining the task of a classifier without any terminology.

Lastly, I made some final edits to correctly describe the ROC curve using the coin-tossing example. For some reason, ChatGPT could not get it right. This is the final product:

The area under the ROC curve (ROC AUC) is a way to measure how well a machine learning model can predict the right answer, just like in the coin-tossing game where you have to guess if a coin is heads or tails. The ROC curve plots the proportion of times you correctly guessed heads (also called the “true positive rate”) against the proportion of times you incorrectly guessed heads (also called the “false positive rate”).

The area under this curve is a measure of how well you did at guessing. If the AUC is close to 1, it means you did a good job at guessing. If the AUC is close to 0, it means you didn’t do a very good job.

The probabilistic meaning of the ROC AUC is that it represents the probability that a classifier will rank a randomly chosen positive example higher than a randomly chosen negative example. A classifier with an AUC of 1 will rank all positive examples higher than all negative examples, while a classifier with an AUC of 0.5 will rank examples randomly.

See more of ELI5ML HERE

Reference

- https://openai.com/blog/chatgpt/

- https://www.linkedin.com/feed/update/urn:li:activity:7008661018142810112/