LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS

| Paper Title | LORA: LOW-RANK ADAPTATION OF LARGE LANGUAGE MODELS |

| Authors | Edward Hu, Yelong Shen, Phillip Wallis, Zeyuan Allen-Zhu, Yuanzhi Li, Shean Wang, Lu Wang, Weizhu Chen |

| Date | 2021-10 |

| Link | https://arxiv.org/pdf/2106.09685.pdf |

Paper summary

- Introduction

- Fine-tuning LLMs is often computing-intensive due to the large number of model parameters. At the same time, we emperical evidence suggest that such training is often superfluous (due to over-parameterized models).

- Existing mitigations involve freezing the base network and training additional layers on top of it. These approaches often add latency to inference and under-perform compared to the finetuning baselines.

- LoRA attempt to solve those problems by training a low-rank weight matrix for each layer of the base network and combine the activation of the base network and the activation of the newly-trained low-rank weight

- Problem statement

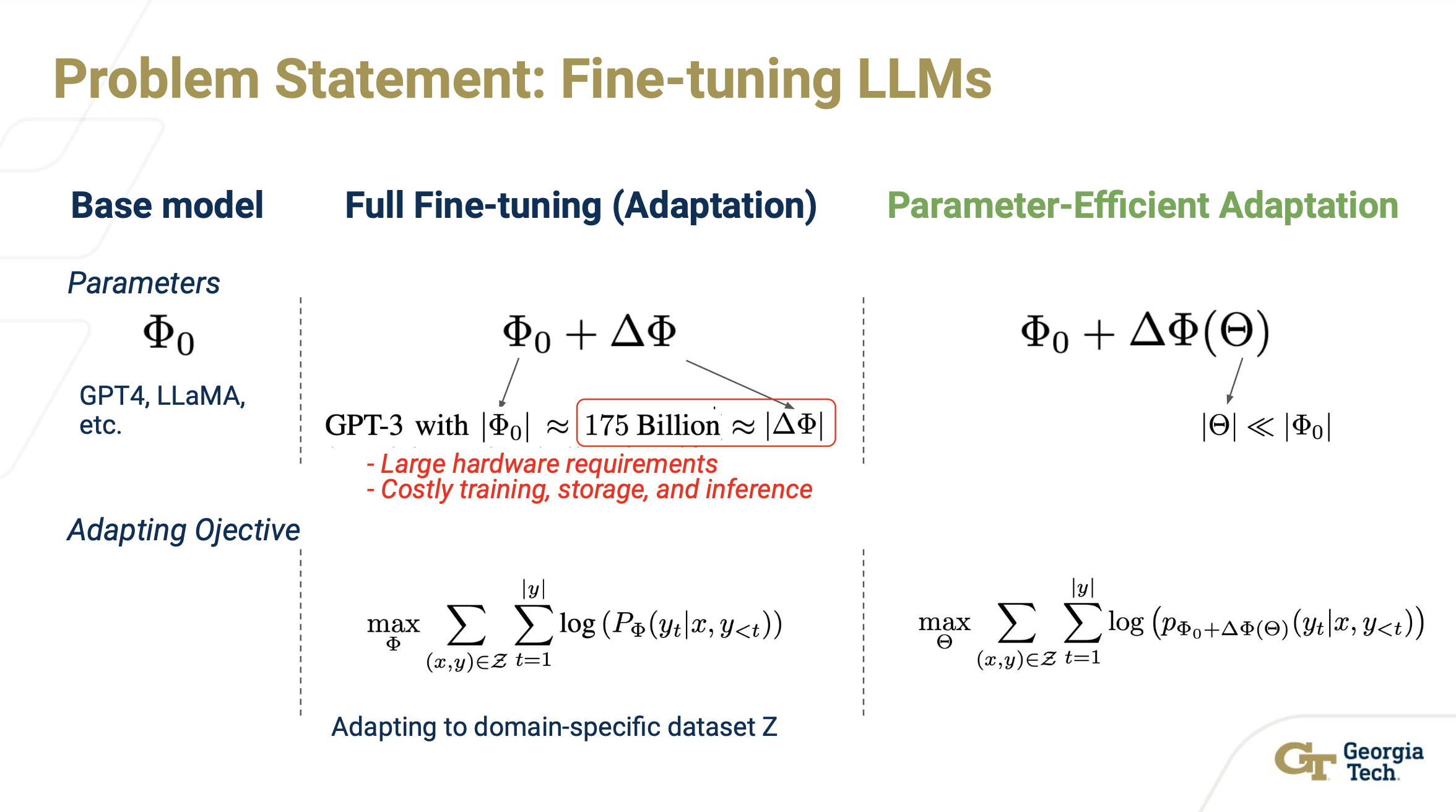

- For any base model (say GPT4, LLaMA, etc.), they have a set of parameter Phi.

- Fine-tuning is training a set of parameter DeltaPhi such that Phi + DeltaPhi performs the best on a task-specific or domain-specific dataset. However, DeltaPhi has the same number of parameter as Phi, which is not ideal.

- Parameter-efficient fine tuning (PEFT) assumes that DeltaPhi can actually be represented by a smaller set of parameter Theta. Different PEFT methods differs mostly on how to construct such model.

- Existing methods

- Prompt Engineering: Instruction with words.

- Continuous Prompts: Instead of discrete prompts (words), use trainable special vectors instead. Suitable for smaller network, not clear on scalability

- Adapter-based methods: Insert small, trainable adapter layers between the existing layers of the base network.

- LoRA method:

- Similar to Adapter-based method in that LoRA attempts to modify the base network on all levels.

- However, LoRA attempts to model the DeltaPhi (d_model x d_model) as a product of 2 smaller matrix A (d_model x r) and B (r x d_model)

- Number of trainable parameter reduced from O(d_model ^ 2) to O(d_model x r)

- The author specifically apply this low-rank decomposition into the linear layers in the multi-headed attention mechanism, namedly W_q, W_v, W_k, W_o.

- Results:

- LoRA was on-par or out-performed the fully-fine-tuned models on all experiments

- Ablation study shows that applying to all W_* in the attention yields the best result, and that very small rank (even r = 1) is enough to achieve the best performance.

Paper Review

Short Summary

The paper present LoRA, an application of low-rank estimation into Parameter-Efficient Fine Tuning (PEFT) problem that was able to achieve performance on-par or bettern than full fine-tuning. The authors discussed existing approaches including prompt engineering, continuous prompt, and adapter insertion and their limitations including extra token space, hard to scale, addition delay during inference. LoRA attempted to mitigate those weaknesses by assuming the adaptation network has a low-rank approximation, thus training such a model would be easier. Experiments and ablation study shows great performance, even with very low rank (r = 1).

Strengths

- Innovative approach to fine-tuning with multiple practical advantages

- Save on storage of multiple fine-tune models

- Can merge the weight during inference, thus requiring no additional latency

- Lower hardware requirements for tuning

- Ablation studies prove that low-rank adaptation is effective, even with r = 1

- A general method, can be applied to many problems and in combinations with other fine-tuning methods

Weaknesses

- Lower hardware requirements, but still very high for LLMs (GPU RAM enough to load the base model)

- Storage saving during inference only happens when there are multiple fine-tuned models

- The Ablation study, while being insightful, still doesn’t explore a lot of different scenarios (e.g. Different ranks for different metrics, Adapting the FFN instead of just Attention)

Reflection

I learned about PEFT when reading this paper, it is amazing how the community has developed techniques and libraries that enable access to LLMs to ordinary researchers, not just the big companies with abundant resources. A later paper (QLoRA) fixes one of the weaknesses of LoRA by using quantization to further reduce training and inference hardware requirements.

Most interesting thought/idea from reading this paper

I want to use this technique to adapt other models such as Diffusion models or DINO.